Coding4Fun Drone 🚁 posts

- Introduction to DJI Tello

- Analyzing Python samples code from the official SDK

- Drone Hello World ! Takeoff and land

- Tips to connect to Drone WiFi in Windows 10

- Reading data from the Drone, Get battery level

- Sample for real time data read, Get Accelerometer data

- How the drone camera video feed works, using FFMPEG to display the feed

- Open the drone camera video feed using OpenCV

- Performance and OpenCV, measuring FPS

- Detect faces using the drone camera

- Detect a banana and land!

- Flip when a face is detected!

- How to connect to Internet and to the drone at the same time

- Video with real time demo using the drone, Python and Visual Studio Code

- Using custom vision to analyze drone camera images

- Drawing frames for detected objects in real-time in the drone camera feed

- Save detected objects to local files, images and JSON results

- Save the Drone camera feed into a local video file

- Overlay images into the Drone camera feed using OpenCV

- Instance Segmentation from the Drone Camera using OpenCV, TensorFlow and PixelLib

- Create a 3×3 grid on the camera frame to detect objects and calculate positions in the grid

- Create an Azure IoT Central Device Template to work with drone information

- Create a Drone Device for Azure IoT Central

- Send drone information to Azure IoT Central

- Using GPT models to generate code to control the drone. Using ChatGPT

- Generate code to control the 🚁 drone using Azure OpenAI Services or OpenAI APIs, and Semantic Kernel

Hi!

In my previous post, I wrote about how we can use ChatGPT to generate Python code to control a drone.

Today, we are going to use Azure OpenAI Services or OpenAI APIs to generate the same code using GPT models.

We are going to use the same prompt that we had for ChatGPT.

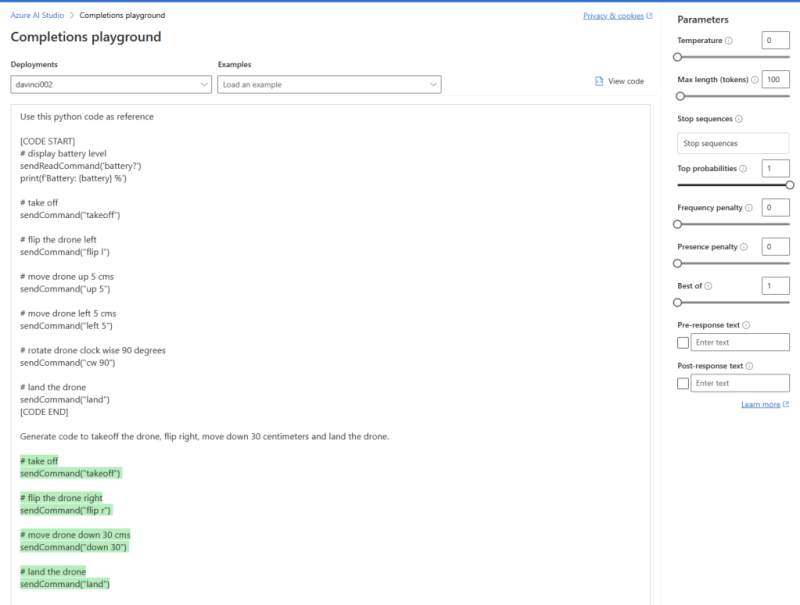

```text
Use this python code as reference
[CODE START]
# display battery level
sendReadCommand('battery?')
print(f'Battery: {battery} %')
# take off
sendCommand("takeoff")
# flip the drone left
sendCommand("flip l")
# move drone up 5 cms
sendCommand("up 5")
# move drone left 5 cms
sendCommand("left 5")
# rotate drone clock wise 90 degrees
sendCommand("cw 90")
# land the drone
sendCommand("land")
[CODE END]

Generate code to takeoff the drone, flip right, move down 30 centimeters and land
```

Running this prompt in Azure OpenAI Studio generates the correct Python code:

Both Azure OpenAI Services and OpenAI APIs have amazing SDKs; however, I’ll use Semantic Kernel so I can keep a single code base that works with both.

_Note: This is the best way to learn Semantic Kernel for .NET and Python:_

Start learning how to use Semantic Kernel.
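Keeping one code base for both services mostly comes down to loading the right connection settings for whichever service is selected. Here is a minimal sketch, assuming the settings live in environment variables; the variable names and the `load_service_settings` helper are hypothetical placeholders, not names required by Semantic Kernel or by either service. Azure OpenAI needs a deployment name, an endpoint, and a key, while the OpenAI API needs a model name and an API key.

```python
import os
from typing import Dict, Mapping, Optional

# Hypothetical environment variable names; adjust them to your own setup.
AZURE_KEYS = ("AZURE_OPENAI_DEPLOYMENT", "AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY")
OPENAI_KEYS = ("OPENAI_MODEL", "OPENAI_API_KEY")


def load_service_settings(use_azure: bool,
                          env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return the settings for the selected service, failing fast if any are missing."""
    env = os.environ if env is None else env
    keys = AZURE_KEYS if use_azure else OPENAI_KEYS
    missing = [key for key in keys if not env.get(key)]
    if missing:
        raise ValueError(f"missing settings: {', '.join(missing)}")
    return {key: env[key] for key in keys}
```

Failing fast on a missing value keeps a misconfigured run from reaching the service with empty credentials, and passing `env` explicitly makes the helper easy to test without touching the real environment.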

Now, let’s create a simple function that:

- Uses Semantic Kernel to generate drone commands

- Calls either Azure OpenAI Services or OpenAI APIs to generate the commands

- Uses the “DroneAI” semantic skill, which holds the prompt

- Returns the generated drone commands as a string

Here is the function code:

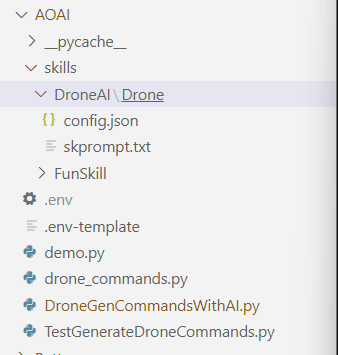
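Since the function is shown as a screenshot, here is a rough, hypothetical sketch of its overall shape rather than the actual code. It assumes each service is wrapped in a callable that takes the user’s order and returns the completion text, which is roughly the role the DroneAI semantic function plays in the real implementation. The `backends` parameter is invented for this sketch so it can run with fakes and no network access.

```python
from typing import Callable, Mapping, Optional

# Hypothetical: a backend sends the user's order to one service
# (OpenAI or Azure OpenAI) and returns the raw completion text.
Backend = Callable[[str], str]


def generate_drone_commands(command: str, use_azure: bool = False,
                            backends: Optional[Mapping[str, Backend]] = None) -> str:
    """Generate drone commands for `command`, using Azure OpenAI when use_azure is True."""
    if not backends:
        raise ValueError("no completion backends configured")
    service = "azure" if use_azure else "openai"
    completion = backends[service](command)
    # Return the generated code as a plain string, without surrounding whitespace.
    return completion.strip()
```

The boolean flag mirrors the `generate_drone_commands(self.test_command, True)` call used in the unit tests later in this post.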

I also need a Skills directory with my DroneAI skill, something like this:

Here is the config.json content:

```json
{
  "schema": 1,
  "description": "Generate commands to control the drone",
  "type": "completion",
  "completion": {
    "max_tokens": 1000,
    "temperature": 0.0,
    "top_p": 1.0,
    "presence_penalty": 0.0,
    "frequency_penalty": 0.0
  },
  "input": {
    "parameters": [
      {
        "name": "input",
        "description": "commands for the drone",
        "defaultValue": ""
      }
    ]
  }
}
```
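A quick sanity check of config.json can catch typos before Semantic Kernel loads the skill. This minimal sketch uses only Python’s `json` module to parse the content and read back the completion settings; the `check_skill_config` helper and the rules it enforces are hypothetical. A `temperature` of 0.0 asks the model for its most likely tokens, which helps keep generated code close to repeatable from run to run.

```python
import json

CONFIG_TEXT = """
{
  "schema": 1,
  "description": "Generate commands to control the drone",
  "type": "completion",
  "completion": {"max_tokens": 1000, "temperature": 0.0, "top_p": 1.0,
                 "presence_penalty": 0.0, "frequency_penalty": 0.0},
  "input": {"parameters": [{"name": "input", "description": "commands for the drone",
                            "defaultValue": ""}]}
}
"""


def check_skill_config(text: str) -> dict:
    """Parse a skill config.json and check the fields this post relies on (hypothetical helper)."""
    config = json.loads(text)
    if config.get("type") != "completion":
        raise ValueError("expected a completion skill")
    names = [param["name"] for param in config["input"]["parameters"]]
    if "input" not in names:
        raise ValueError("the prompt uses {{$input}}, so an 'input' parameter is expected")
    return config["completion"]


settings = check_skill_config(CONFIG_TEXT)
print(settings["temperature"], settings["max_tokens"])  # prints: 0.0 1000
```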

And the skprompt.txt:

```text
Use this python code as reference
[CODE START]
# display battery level
sendReadCommand('battery?')
print(f'Battery: {battery} %')
# take off
sendCommand("takeoff")
# flip the drone left
sendCommand("flip l")
# move drone up 5 cms
sendCommand("up 5")
# move drone left 5 cms
sendCommand("left 5")
# rotate drone clock wise 90 degrees
sendCommand("cw 90")
# land the drone
sendCommand("land")
[CODE END]

Generate python code only to follow these orders
{{$input}}
+++++
```
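Semantic Kernel fills the `{{$input}}` placeholder with the order passed to the function before the prompt is sent. The sketch below imitates only that single substitution with a regular expression, to show what the model ends up receiving. It is not Semantic Kernel’s template engine, which supports more syntax such as other variables and function calls, and `render_prompt` is a hypothetical name.

```python
import re

# Shortened copy of the skprompt.txt template (most reference code omitted).
TEMPLATE = """Use this python code as reference
[CODE START]
sendCommand("takeoff")
sendCommand("land")
[CODE END]
Generate python code only to follow these orders
{{$input}}
+++++"""


def render_prompt(template: str, user_input: str) -> str:
    """Replace every {{$input}} placeholder (optional inner spaces allowed) with user_input."""
    # A lambda replacement avoids treating backslashes in user_input as escapes.
    return re.sub(r"\{\{\s*\$input\s*\}\}", lambda _: user_input, template)


prompt = render_prompt(TEMPLATE, "takeoff the drone, flip right, move down 30 centimeters and land")
print(prompt.splitlines()[-2])
```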

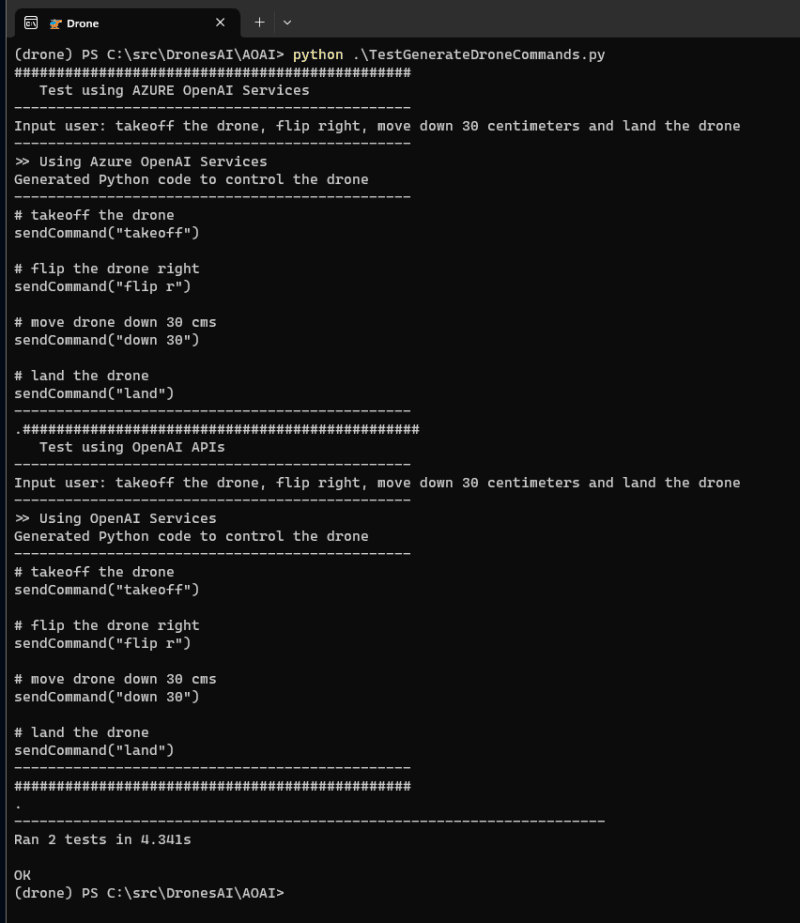

And that’s it! We can test this function with two unit tests, one for each platform:

```python
import unittest

from DroneGenCommandsWithAI import generate_drone_commands


class TestGenerateDroneCommands(unittest.TestCase):

    test_command = "takeoff the drone, flip right, move down 30 centimeters and land the drone"

    def test_generate_drone_commands_using_openAI(self):
        print('###############################################')
        print(' Test using OpenAI APIs')
        commands = generate_drone_commands(self.test_command)
        # validate if the generated string contains the drone commands
        self.assertIn('takeoff', commands)
        self.assertIn('flip r', commands)
        self.assertIn('down 30', commands)
        self.assertIn('land', commands)
        print('###############################################')

    def test_generate_drone_commands_using_azureOpenAI(self):
        print('###############################################')
        print(' Test using AZURE OpenAI Services')
        # the second argument selects Azure OpenAI Services instead of OpenAI APIs
        commands = generate_drone_commands(self.test_command, True)
        # validate if the generated string contains the drone commands
        self.assertIn('takeoff', commands)
        self.assertIn('flip r', commands)
        self.assertIn('down 30', commands)
        self.assertIn('land', commands)


if __name__ == '__main__':
    unittest.main()
```
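The `assertIn` checks in these tests pass as long as each substring appears anywhere in the response, even inside a comment. A stricter variant, sketched below under the assumption that the model keeps the `sendCommand("...")` style from the reference code, extracts the actual command strings in order so a test can compare the full sequence. The `extract_commands` helper is hypothetical.

```python
import re
from typing import List

# Matches sendCommand("...") or sendCommand('...') and captures the command text.
COMMAND_PATTERN = re.compile(r"""sendCommand\(\s*(["'])(.*?)\1\s*\)""")


def extract_commands(generated_code: str) -> List[str]:
    """Return the drone commands passed to sendCommand, in the order they appear."""
    commands = []
    for line in generated_code.splitlines():
        code = line.split("#", 1)[0]  # naively ignore anything after a '#' comment marker
        commands.extend(match.group(2) for match in COMMAND_PATTERN.finditer(code))
    return commands


sample = '''# take off
sendCommand("takeoff")
sendCommand("flip r")  # flip right
# sendCommand("up 5")
sendCommand('down 30')
sendCommand("land")'''

print(extract_commands(sample))  # prints: ['takeoff', 'flip r', 'down 30', 'land']
```

Inside a test, `self.assertEqual(extract_commands(commands), ["takeoff", "flip r", "down 30", "land"])` would fail if the model adds, drops, or reorders a step. Whether that strictness is useful depends on how much variation you want to allow in the generated code.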

And they both work!

In the next post, we will review some of the options and configuration settings needed for correct code generation.

Happy coding!

Greetings

El Bruno

More posts on my blog, ElBruno.com.

More info in https://beacons.ai/elbruno