As a developer, I'm always on the lookout for new tools and techniques that can streamline my workflow and save me time. Recently, I decided to give ChatGPT a try for web automation.

My goal was to extract story data from HackerNews using ChatGPT, so I fed it a series of prompts, hoping it would generate the appropriate regex for me.

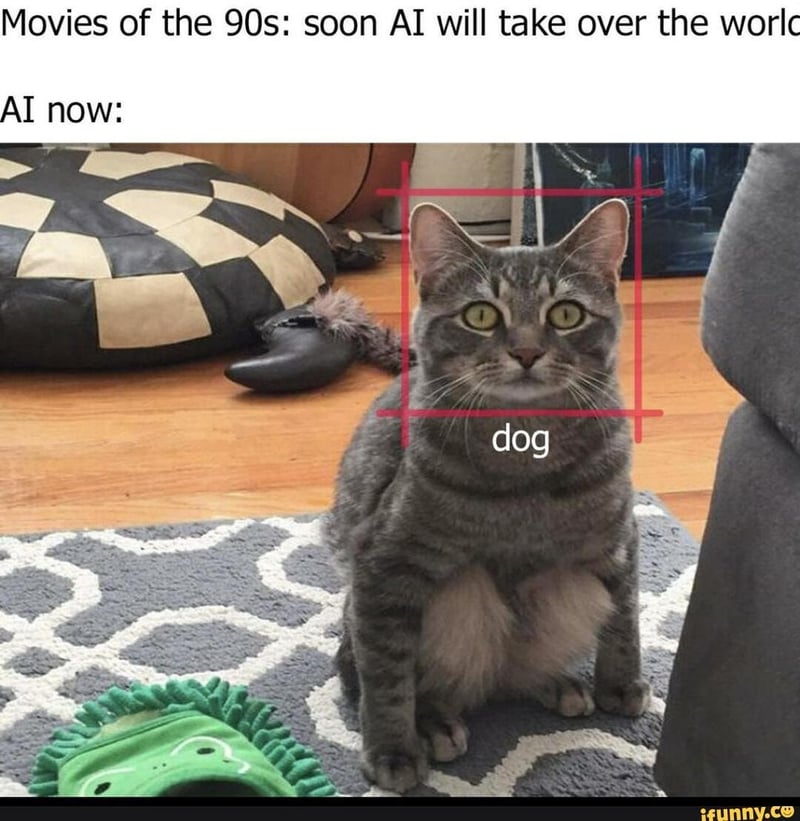

Oh! I was so wrong!

Unfortunately, despite its impressive capabilities and hype, ChatGPT ultimately failed to deliver the goods.

While it did generate some text that sounded like it might be on the right track, it didn't actually solve my problem. This was both good news and bad news.

On the one hand, ChatGPT seemed to be adapting to my prompts. On the other hand, it didn't actually get the job done.

In the end, I decided to take matters into my own hands and started adjusting the generated regex myself. It took some trial and error, but eventually, I was able to get it working.

Steps I have taken

I told ChatGPT to act as a regex generator and to produce its output in JavaScript.

I simply copied the text from HackerNews and pasted it into the chat.

I gave ChatGPT the input text and the output I expected the regex to produce.

ChatGPT replied with a regex and a sample output, and it looked absolutely accurate.

To validate it, I pasted it into regex101, which highlights every match in the test text.

It looked like it worked, except for one result, so I went back to ChatGPT and provided more information.

But instead of fixing that one case, it broke the whole regex. Everything, including the time field, stopped matching.
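A regression like this is easy to miss when every revision is checked by eye. One way to guard against it, sketched below in JavaScript under loose assumptions, is to keep a few pasted stories as fixtures, record the fields each one should yield, and re-run every candidate regex against all of them. The fixture text, the expected values, and the `checkRegex` helper are hypothetical; the candidate under test is the final regex from this post.

```javascript
// Candidate under test: the final regex from this post (no flags needed here).
const FINAL_RE =
  /^(?<rank>\d+)\.\s+(?<title>.+)\s+\((?<site>.+)\)\s+(?<points>\d+)?\s+points?\s+by\s+(?<author>\S+)?\s+(?<time>.+?)\s+\|\s+hide(\s+\|\s+(?<comments>\d+)\s+comments)?/;

// Hypothetical fixtures: one pasted story each (title line + metadata line),
// paired with the named groups we expect the regex to capture.
const fixtures = [
  {
    text: "1. A hypothetical story title (example.com)\n120 points by alice 3 hours ago | hide | 45 comments",
    expected: { rank: "1", site: "example.com", author: "alice", time: "3 hours ago", comments: "45" },
  },
  {
    text: "2. Another made-up story (example.org)\n7 points by bob 1 hour ago | hide",
    expected: { rank: "2", site: "example.org", author: "bob", time: "1 hour ago", comments: undefined },
  },
];

// Hypothetical helper: returns human-readable failures; an empty array means all fixtures pass.
function checkRegex(regex, cases) {
  // Drop the g flag, because String.prototype.match only returns groups for non-global regexes.
  const re = new RegExp(regex.source, regex.flags.replace("g", ""));
  const failures = [];
  cases.forEach(({ text, expected }, i) => {
    const match = text.match(re);
    if (!match) {
      failures.push(`case ${i}: no match`);
      return;
    }
    for (const [field, want] of Object.entries(expected)) {
      // groups is undefined when the regex has no named groups at all.
      const got = match.groups?.[field];
      if (got !== want) {
        failures.push(`case ${i}: ${field} = ${JSON.stringify(got)}, expected ${JSON.stringify(want)}`);
      }
    }
  });
  return failures;
}

const failures = checkRegex(FINAL_RE, fixtures);
console.log(failures.length === 0 ? "all fixtures pass" : failures.join("\n"));
```

An empty failure list means every fixture still passes; a revision that fixes one story but breaks another shows up immediately as a named field mismatch instead of a missing highlight.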

I kept iterating this way, using ChatGPT's output as a starting point and making manual adjustments until I had the regex I wanted.

By this point, the process had taken a lot of tries, which used up precious credits I could have spent on other things.

¯\_(ツ)_/¯

So I stopped prompting and finished tweaking the regex myself.

This is the final regex, which worked for most stories:

```
^(?<rank>\d+)\.\s+(?<title>.+)\s+\((?<site>.+)\)\s+(?<points>\d+)?\s+points?\s+by\s+(?<author>\S+)?\s+(?<time>.+?)\s+\|\s+hide(\s+\|\s+(?<comments>\d+)\s+comments)?
```
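For reference, this is roughly how the regex could be applied in JavaScript, the output language I asked ChatGPT for. It is a minimal sketch, not the code I actually ran: the `parseStories` helper and the sample text are hypothetical, and it adds the `g` flag (required by `String.prototype.matchAll`) and the `m` flag (so `^` matches at the start of each story's first line, not only at the start of the text).

```javascript
// The final regex, plus g (required by matchAll) and m (so ^ matches after every newline).
const STORY_RE =
  /^(?<rank>\d+)\.\s+(?<title>.+)\s+\((?<site>.+)\)\s+(?<points>\d+)?\s+points?\s+by\s+(?<author>\S+)?\s+(?<time>.+?)\s+\|\s+hide(\s+\|\s+(?<comments>\d+)\s+comments)?/gm;

// Hypothetical helper: turns pasted HackerNews text into one plain object per matched story.
function parseStories(text) {
  return [...text.matchAll(STORY_RE)].map(({ groups }) => ({
    rank: Number(groups.rank),
    title: groups.title,
    site: groups.site,
    // Optional groups are undefined when they did not take part in the match.
    points: groups.points === undefined ? null : Number(groups.points),
    author: groups.author ?? null,
    time: groups.time,
    comments: groups.comments === undefined ? null : Number(groups.comments),
  }));
}

// Hypothetical sample input in the shape of copied HackerNews text.
const pasted = [
  "1. A hypothetical story title (example.com)",
  "120 points by alice 3 hours ago | hide | 45 comments",
  "2. Another made-up story (example.org)",
  "7 points by bob 1 hour ago | hide",
].join("\n");

console.log(parseStories(pasted));
```

Stories that don't fit the pattern, such as posts without a site in parentheses, are generally skipped, and a story whose count is written in the singular ("1 comment") comes back with `comments: null`, because the optional group only matches `comments`.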

Conclusion of the experiment

Even though there are hundreds of other ways to grab the data from HackerNews, using AI to extract data from a blob of text was an interesting challenge to try with ChatGPT. But this experiment taught me one thing:

Do not blindly trust GPT and its variants. They can give you incorrect answers with very high confidence, and your precious app will break in no time.

Okay, I could have given it better input, but keep in mind that I tried this process many times with different inputs. Throughout, it kept padding its responses with large blobs of text I didn't need, even when I explicitly asked it not to.

For example, this prompt specifically asks it not to provide an explanation, but who cares?

What does ChatGPT have to say about this?

I told ChatGPT about the situation above, and it replied sympathetically:

I’m glad you found ChatGPT to be a helpful tool in your workflow, even if it didn’t quite solve the problem you were working on. It’s important to remember that large language models like ChatGPT, while extremely powerful and versatile, still have limitations when it comes to certain tasks.

In your case, using a large language model for web automation was a novel approach, and it’s not surprising that it didn’t work perfectly on the first try. Web automation can be a complex task that requires a deep understanding of web technologies, as well as specific domain knowledge about the sites and data you are trying to access. In these cases, it’s often best to use specialized tools or rely on your own expertise and understanding of the problem.

That being said, it’s always worth considering whether a large language model could be helpful in solving a specific problem. They can be a powerful tool in the right context, and with the right amount of training and guidance, they can learn and adapt to new tasks and environments. The key is to use them in a way that complements your own skills and knowledge, rather than relying on them as a replacement.

Conclusion

This experiment taught me about ChatGPT's limitations. Even though large language models can write text that sounds human, handle a wide range of tasks, and speak many languages, they may lack the technical expertise to do a specific task as well as a specialized tool or a person with expertise in that area.

My own knowledge and skills solved the problem, not ChatGPT, which shows that language models still need human understanding and expertise behind them. Large language models can be helpful, but they shouldn't replace human understanding.

You should not depend too much on ChatGPT.