In the not-so-distant past, dabbling in generative AI technology meant leaning heavily on proprietary models. The routine was straightforward: snag an OpenAI key, and you're off to the races, albeit tethered to a pay-as-you-go scheme. This barrier, however, started to crumble with the introduction of ChatGPT, flinging the doors wide open for any online user to experiment with the technology sans the financial gatekeeping.

Yet 2023 marked a thrilling pivot as the digital landscape began to bristle with Open Source models. Looking back on an interview with Sam Altman, I'd say his forecast of a future dominated by a handful of models, with innovation coming mainly from iterations on those giants, has missed the mark. Although GPT-4 still reigns at the apex of Large Language Models (LLMs), the Open Source community is rapidly gaining ground.

Among the burgeoning Open Source projects, one, in particular, has captured my attention: Ollama. Contrary to initial impressions, Ollama isn't just another model tossed into the ever-growing pile. Instead, it's a revolutionary application designed to empower users to download and run popular models directly on their local machines, democratizing access to cutting-edge AI technology.

What's the Hype?

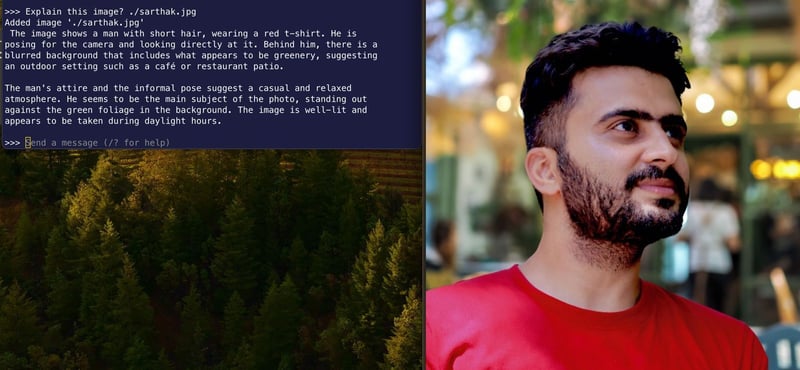

Okay, instead of just telling you, let me show you something.

Download Ollama onto your local machine.

Once that's done, try chatting with the model here:

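If you encounter a CORS error, quit any Ollama instance that's already running and start the server with this command, which tells it to accept requests from any origin: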

```shell
OLLAMA_ORIGINS=* ollama serve
```

With that, you can chat with the Llama 2 model, right inside an article, while it runs on your local machine.

Take a look at the code. It's just a simple fetch request:

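```javascript
// POST the prompt typed into the page's <input> to the local Ollama server
fetch("http://localhost:11434/api/generate", {
  method: "POST",
  headers: {
    "Content-Type": "application/json",
  },
  body: JSON.stringify({
    model: "llama2", // any model you've downloaded with Ollama
    prompt: document.querySelector("input").value,
    stream: true, // the reply arrives as a stream of JSON objects, one per line
  }),
});
```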
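Because the request sets `stream: true`, Ollama answers with newline-delimited JSON: a series of objects, each carrying the next piece of text in `response`, with `done: true` on the last one. The snippet above doesn't read that reply, so here is a minimal sketch of how you might consume it. The name `readOllamaStream` and the `onToken` callback are my own illustrative choices, and treating an `error` field as a failure is an assumption about the error shape.

```javascript
// Read Ollama's streamed reply: newline-delimited JSON, one object per line.
// Each object carries the next piece of text in `response`; the last has done: true.
async function readOllamaStream(response, onToken = () => {}) {
  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let buffer = "";
  let fullText = "";

  // Parse every complete line in the buffer, keeping any partial line for later.
  const parseLines = () => {
    let newline;
    while ((newline = buffer.indexOf("\n")) !== -1) {
      const line = buffer.slice(0, newline).trim();
      buffer = buffer.slice(newline + 1);
      if (!line) continue;
      const chunk = JSON.parse(line);
      if (chunk.error) throw new Error(chunk.error); // assumed error shape
      if (chunk.response) {
        fullText += chunk.response;
        onToken(chunk.response);
      }
    }
  };

  while (true) {
    const { value, done } = await reader.read();
    if (done) break;
    // A network chunk can end mid-line, so decode incrementally and buffer.
    buffer += decoder.decode(value, { stream: true });
    parseLines();
  }
  buffer += decoder.decode(); // flush any bytes the decoder is still holding
  if (buffer.trim()) buffer += "\n"; // treat a trailing unterminated line as complete
  parseLines();
  return fullText;
}
```

In the page you could call it as `readOllamaStream(await fetch(...), (text) => { output.textContent += text; })`, where `output` is a hypothetical element. Buffering until a newline matters because a network chunk can end halfway through a JSON object.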

`llama2` isn't the only model available. Ollama's library has a long list of models that you can download and run locally. All you have to do is pull the one you want (for example, `ollama pull mistral`) and change the model name, `model: "llama2"`, in the fetch request.
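To check which model names will work before sending a request, you can ask the server what's installed. This sketch assumes Ollama's `GET /api/tags` endpoint, which lists local models under `models`, each with a `name` such as `llama2:latest`. `listLocalModels`, `hasModel`, and the injectable `fetchImpl` parameter (handy for testing) are hypothetical helpers, not part of Ollama.

```javascript
// List the models already downloaded to the local Ollama server.
// Assumes GET /api/tags returns { models: [{ name: "llama2:latest", ... }, ...] }.
async function listLocalModels(baseUrl = "http://localhost:11434", fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(`${baseUrl}/api/tags`);
  if (!res.ok) throw new Error(`Ollama responded with HTTP ${res.status}`);
  const { models } = await res.json();
  return models.map((m) => m.name);
}

// A bare name like "llama2" refers to the "latest" tag; "llama2:13b" is exact.
function hasModel(installed, name) {
  const wanted = name.includes(":") ? name : `${name}:latest`;
  return installed.includes(wanted);
}
```

For example, `if (!hasModel(await listLocalModels(), "mistral"))` is a natural place to tell the user to run `ollama pull mistral`.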

Fun, right? Now you have powerful models running on your computer, offline.

What's fascinating is that any machine with 8 GB of RAM can run the 7B models. Bigger models need more: Ollama's README recommends 16 GB for the 13B models and 32 GB for the 33B models.
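Why does 8 GB go so far for a 7B model? A rough rule of thumb is parameters × bits per weight ÷ 8 bytes. The sketch below assumes 4-bit quantized weights, which is typical of Ollama's default model tags, and ignores the context cache, runtime overhead, and everything else running on your machine, so treat the numbers as a floor rather than a requirement. `estimateWeightsGB` is an illustrative helper.

```javascript
// Rough size of a model's weights in decimal gigabytes:
// parameters × bits per weight ÷ 8 bits per byte.
// Assumption: 4-bit quantization by default; real memory use is higher
// (context/KV cache, runtime overhead, the OS and your other apps).
function estimateWeightsGB(paramsBillions, bitsPerWeight = 4) {
  const bytes = (paramsBillions * 1e9 * bitsPerWeight) / 8;
  return bytes / 1e9;
}

for (const size of [7, 13, 33]) {
  console.log(`${size}B at 4-bit: ~${estimateWeightsGB(size).toFixed(1)} GB of weights`);
}
// 7B at 4-bit: ~3.5 GB of weights
// 13B at 4-bit: ~6.5 GB of weights
// 33B at 4-bit: ~16.5 GB of weights
```

By the same arithmetic, an unquantized 16-bit 7B model would need about 14 GB for its weights alone, which is why quantization is what makes 8 GB machines viable.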

How Could This Change Everything?

Major players like OpenAI and Cohere offer similar APIs, but they always come at a cost, not to mention the privacy concerns of sending your data to someone else's servers.

Sure, hosting and running these models online is an option, but just imagine the possibilities that come with operating these models offline.

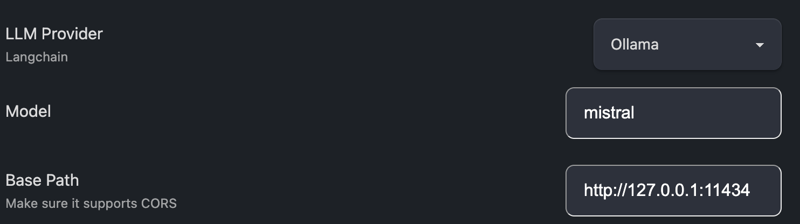

For instance, take a look at Text Gen, a plugin for Obsidian. In its settings, you can set Ollama as your LLM provider and specify the model to use, like this:

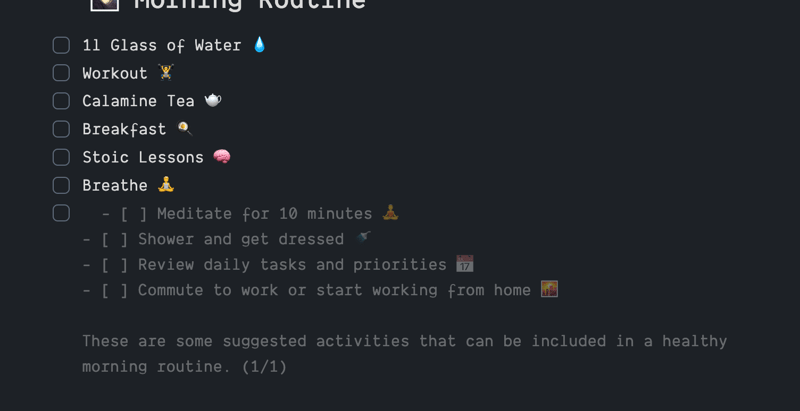

And voilà, you now have auto-completion in Obsidian, just like that.

Alternatively, you can use Continue, an open-source coding assistant for VS Code and JetBrains IDEs, as a replacement for GitHub Copilot. Simply download codellama with Ollama, select Ollama as your LLM provider in Continue, and you've got a local Copilot running on your computer.

I mean, now you can do this...

No internet needed. Zero cost.

Interested in more cool experiments built on Ollama? Here are 10:

And...

Imagine adding an Ollama provider to your project. If a user has Ollama installed, you can offer them powerful AI features at no cost.
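Here's a minimal sketch of what such a provider could look like: probe the local server, use it if it answers, and otherwise fall back to whatever your app already uses. It assumes Ollama's default address (`http://localhost:11434`), uses `GET /api/tags` as a cheap health check, and sends non-streaming requests (`stream: false`), which return a single JSON object whose `response` field holds the full reply. `createTextProvider`, `isOllamaAvailable`, and `fallbackGenerate` are hypothetical names; `fallbackGenerate` stands in for your existing cloud call.

```javascript
// Hypothetical "Ollama provider": prefer the user's local Ollama server and
// fall back to your existing provider when it isn't reachable.
const OLLAMA_URL = "http://localhost:11434"; // Ollama's default address

// Treat any failure (connection refused, timeout, CORS block) as "not available".
async function isOllamaAvailable(fetchImpl = globalThis.fetch, timeoutMs = 1000) {
  try {
    const res = await fetchImpl(`${OLLAMA_URL}/api/tags`, {
      signal: AbortSignal.timeout(timeoutMs),
    });
    return res.ok;
  } catch {
    return false;
  }
}

async function createTextProvider({ model = "llama2", fallbackGenerate, fetchImpl = globalThis.fetch } = {}) {
  if (await isOllamaAvailable(fetchImpl)) {
    return {
      name: "ollama",
      async generate(prompt) {
        const res = await fetchImpl(`${OLLAMA_URL}/api/generate`, {
          method: "POST",
          headers: { "Content-Type": "application/json" },
          // stream: false returns one JSON object with the full reply in `response`.
          body: JSON.stringify({ model, prompt, stream: false }),
        });
        if (!res.ok) throw new Error(`Ollama responded with HTTP ${res.status}`);
        const data = await res.json();
        return data.response;
      },
    };
  }
  // `fallbackGenerate(prompt)` is whatever cloud call your app already makes.
  return { name: "fallback", generate: fallbackGenerate };
}
```

In a web app, keep in mind that the probe only succeeds if the user's Ollama server allows your origin (the `OLLAMA_ORIGINS` setting from earlier); otherwise this sketch quietly falls back.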

Conclusion

This is, of course, just the start. The newest versions of Ollama support some really cool models, such as Gemma, a family of lightweight, state-of-the-art open models built by Google DeepMind and launched in February 2024.

Or consider the LLaVA model, which can give your app computer vision capabilities.
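For multimodal models like LLaVA, Ollama's `/api/generate` accepts an `images` array of base64-encoded images alongside the prompt. This sketch only builds the request body and assumes you've already run `ollama pull llava`; `bytesToBase64` and `buildVisionRequest` are illustrative helpers.

```javascript
// Build a /api/generate request for a multimodal model such as LLaVA.
// Ollama expects images as base64 strings in an `images` array.
function bytesToBase64(bytes) {
  let binary = "";
  for (const byte of bytes) binary += String.fromCharCode(byte);
  return btoa(binary); // btoa is a global in browsers and in Node 16+
}

function buildVisionRequest(prompt, imageBytes, model = "llava") {
  return { model, prompt, images: [bytesToBase64(imageBytes)], stream: false };
}

// Usage in a browser, assuming `file` comes from an <input type="file">:
//   const bytes = new Uint8Array(await file.arrayBuffer());
//   const res = await fetch("http://localhost:11434/api/generate", {
//     method: "POST",
//     headers: { "Content-Type": "application/json" },
//     body: JSON.stringify(buildVisionRequest("What is in this picture?", bytes)),
//   });
//   console.log((await res.json()).response);
```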

Ollama is just the beginning, I would say, but it's not the only project embracing this philosophy. There's also an older project called WebLLM that downloads open-source models into your browser cache, allowing you to use them offline.

So, where does it go from here? No one really knows. But if I had to guess, I'd say these models will soon be part of operating systems. I mean, they almost have to be. It's possible that Apple is already working on one; who knows? Their machines certainly have the powerful chips needed. 🤷‍♂️

Offline LLMs will be the future, especially given concerns about privacy and security. Before long, even your mobile devices will come with LLMs built in. The question is, how will we use them? Trust me, chatting is not the only use case; there will be many more. The latest mobile devices, like the Samsung Galaxy S24 Ultra, have already started leveraging AI-powered features, and those features are relatively well integrated into the OS as a whole. My guess is that as local LLMs get more powerful, they will empower our mobile devices in even more novel (and secure) ways.

I encourage you to try your hand at this technology. You can visit Ollamahub to find different Modelfiles and prompt templates that give your local LLMs a twist.
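A Modelfile bakes a system prompt and parameters into a new local model. The comment at the top of this sketch is modeled on the Mario example in Ollama's README, and `ollama create mario -f ./Modelfile` would turn it into a model named `mario`. If you'd rather experiment per request, `/api/generate` also accepts a `system` prompt and an `options` object with the same parameters. `buildPersonaRequest` is a hypothetical helper, and its default temperature simply mirrors the Modelfile.

```javascript
// Modelfile version (saved as ./Modelfile, then `ollama create mario -f ./Modelfile`):
//   FROM llama2
//   PARAMETER temperature 1
//   SYSTEM """
//   You are Mario from Super Mario Bros. Answer as Mario, the assistant, only.
//   """
//
// Per-request version: send `system` and `options` along with the prompt.
function buildPersonaRequest(prompt, { model = "llama2", system, temperature = 1 } = {}) {
  const body = { model, prompt, stream: false, options: { temperature } };
  // Only override the model's built-in system prompt when one is given.
  if (system) body.system = system;
  return body;
}
```

Sending `JSON.stringify(buildPersonaRequest("Hello!", { system: "You are Mario from Super Mario Bros." }))` as the body of the same fetch call gives you a Mario-flavored llama2 without creating a new model.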

Consider using a library like LangChain to further build upon this technology, adding long-term memory and context awareness. You can even try a low-code setup locally with Flowise.
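LangChain can manage memory for you, but the core idea is simple enough to sketch by hand. This is not LangChain's API; it's a minimal illustration that keeps the message history and resends it to Ollama's `/api/chat` endpoint on every turn, so the model sees the whole conversation. It assumes `stream: false`, in which case the reply comes back as `{ message: { role, content }, ... }`. `OllamaChat` and its `fetchImpl` option are hypothetical.

```javascript
// Minimal conversation memory on top of Ollama's /api/chat (not LangChain's API).
// The full history is resent each turn so the model keeps context.
class OllamaChat {
  constructor({ model = "llama2", baseUrl = "http://localhost:11434", fetchImpl = globalThis.fetch, system } = {}) {
    this.model = model;
    this.baseUrl = baseUrl;
    this.fetchImpl = fetchImpl;
    this.messages = system ? [{ role: "system", content: system }] : [];
  }

  async send(content) {
    const messages = [...this.messages, { role: "user", content }];
    const res = await this.fetchImpl(`${this.baseUrl}/api/chat`, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ model: this.model, messages, stream: false }),
    });
    if (!res.ok) throw new Error(`Ollama responded with HTTP ${res.status}`);
    const data = await res.json();
    // Only commit the turn to memory once the model has replied.
    this.messages = [...messages, data.message];
    return data.message.content;
  }
}
```

Real long-term memory would also trim or summarize old messages once the history outgrows the model's context window; libraries like LangChain offer helpers for that kind of bookkeeping.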

Isn't this exciting? I'd love to hear what you think! 🌟 Have any cool ideas started bubbling up in your mind? Please don't hesitate to share your thoughts in the comments below. I'm all ears!

And if you're itching to chat about a potential idea or just need a bit of guidance, why not drop me a line on Twitter @sarthology? I can't wait to connect with you. See you around! 💬✨