In a world where technology is evolving at an astounding pace, the ChatGPT API has emerged as a game-changer, opening up a universe of possibilities for developers. The ChatGPT API makes it possible to integrate the power of human-like artificial intelligence into your application.

In this tutorial, you'll learn how to use the ChatGPT API in your application to build a content generation tool that takes an article's title, pitch, and target word count from the user and generates the article accordingly.

Implementing the ChatGPT API in Your Application

To start off, you'll need Node.js installed on your local system. This ChatGPT API guide will use Yarn to install dependencies in the project, but you're free to use npm or any other package management tool if you wish. Finally, you'll need an OpenAI account for ChatGPT API access.

If you’re using Pieces, the code snippets used throughout the tutorial are linked in the sentences introducing the code.

Setting Up the Starting App

To help you get started quickly, the frontend for your content generation tool has already been built. You can clone it by running the following command on your local machine:

```bash
git clone https://github.com/krharsh17/contentgpt.git
```

Once you've cloned the project, change your terminal's working directory to the project's root directory by running the following command:

```bash
cd contentgpt
```

Next, install the dependencies required for the project by running the following command:

```bash
yarn install
```

Once the dependencies are installed, you can run the app using the following command:

```bash
yarn dev
```

This is what the UI looks like:

You'll find the complete code for this UI in the pages/index.js file. There are three input fields asking the user to enter the title of the article, its pitch, and a preferred word count. Once the user enters these details and clicks the blue button at the end, the app sends a request to its /api/generate endpoint. This endpoint has not been implemented yet, and this is where you will integrate the ChatGPT API. The app expects the /api/generate endpoint to respond with a JSON object of the following format:

```json
{
  "content": "<article content here>"
}
```
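The frontend in pages/index.js already makes this call, so you don't need to write it yourself. To make the contract concrete, here is a minimal sketch of such a client call. It assumes a POST request whose body is a JSON string containing title, purpose, and length, which are the fields the handler later in this tutorial reads with JSON.parse(req.body). The names requestArticle and fetchImpl are hypothetical; fetchImpl exists only so the function can be exercised without a running server.

```javascript
// Hypothetical client-side helper illustrating the /api/generate contract.
// Assumption: the body carries title, purpose, and length as a JSON string.
async function requestArticle({ title, purpose, length }, fetchImpl = fetch) {
  const response = await fetchImpl("/api/generate", {
    method: "POST",
    // No Content-Type header is set, so the server receives a raw string
    // and parses it itself.
    body: JSON.stringify({ title, purpose, length }),
  });

  // The endpoint always answers with { "content": "..." }
  const data = await response.json();

  // The server also uses `content` for its error message (with status 500),
  // so check the status before treating `content` as an article.
  if (!response.ok) {
    throw new Error(data.content || `Request failed with status ${response.status}`);
  }
  if (typeof data.content !== "string") {
    throw new Error("Unexpected response from /api/generate");
  }
  return data.content;
}
```

Checking response.ok keeps an error message from being rendered as if it were the generated article.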

You're now ready to integrate the ChatGPT API into your application.

ChatGPT API Integration

The OpenAI API offers many endpoints to help you add AI capabilities to your application, each meant for a specific use case. For this article, you'll use the Chat endpoint (/v1/chat/completions). The legacy Completions endpoint would also fit this use case, but as the OpenAI documentation explains, the Chat endpoint is cheaper and provides access to the GPT-3.5 Turbo and GPT-4 models, which the Completions endpoint does not.

When working with a large language model (LLM) like GPT, it's important to phrase your prompts carefully. For the purposes of this article, you'll instruct the model using the following prompts:

```json
[
  {
    "role": "system",
    "content": "You are a content generation tool. After the user provides you with the topic name, article length, and pitch of the article, you will generate the article and respond with ONLY the article in Markdown format and no other extra text."
  },
  {
    "role": "user",
    "content": "Write an article on the topic <your topic here>. The article should be close to <your word length here> words in length. Here is a pitch describing the contents of the article: <your pitch here>"
  }
]
```

This provides the model with two input messages as prompts. The first message defines the model's behavior: act as a content generation tool and respond with only the desired output, without supporting text such as "Sure! Here's your article:" that ChatGPT often adds to its responses. This is a system message, meaning it is an internal instruction that the application provides to the model.

The second message asks the model to write the article with the given description. This is a user message: a request from the user that the model should act on and respond to. You can learn more about message roles in this transition guide by OpenAI.

Feel free to modify the prompt to generate more specific responses. The more specific your prompts are, the more likely the model is to respond with the content you want.
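If you plan to tweak the prompt, it helps to build the messages array in one place. The sketch below uses a hypothetical buildMessages helper; the message text mirrors the prompts above, and the parameter names (title, length, purpose) match the fields the handlers in this tutorial read from the request.

```javascript
// Hypothetical helper that assembles the two-message prompt shown above.
const SYSTEM_PROMPT =
  "You are a content generation tool. After the user provides you with the topic name, " +
  "article length, and pitch of the article, you will generate the article and respond " +
  "with ONLY the article in Markdown format and no other extra text.";

function buildMessages({ title, length, purpose }) {
  return [
    // System message: an instruction from the application, not the end user
    { role: "system", content: SYSTEM_PROMPT },
    // User message: the request the model should respond to
    {
      role: "user",
      content:
        `Write an article on the topic "${title}". ` +
        `The article should be close to ${length} words in length. ` +
        `Here is a pitch describing the contents of the article: ${purpose}`,
    },
  ];
}
```

Keeping the system prompt in a constant means a prompt change touches a single line, whichever way (REST or library) you end up sending the request.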

Before you begin writing the API calls to interact with the ChatGPT API, you need to first acquire your OpenAI API key.

Acquiring Your OpenAI API Key

You'll find your OpenAI API keys on the API keys page of your OpenAI account settings. If you haven't created a key yet, click the Create new secret key button. A new key is shown only once, so copy it and store it somewhere safe.

Once you have your API key, create a new file called .env in your project's root directory and store the key in it in the following format:

```bash
OPENAI_API_KEY=<your key here>
```

Next.js loads this file automatically, so you can now access the key in your server-side code through process.env.OPENAI_API_KEY.
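Next.js exposes .env values to server-side code such as API routes, and because the variable name doesn't start with NEXT_PUBLIC_, the key is not bundled into the browser code. If the key is missing, the requests in this tutorial would be sent with "Bearer undefined" and fail with an authentication error. A small fail-fast check, sketched here under a hypothetical name (getOpenAIKey), surfaces that problem with a clearer message.

```javascript
// Hypothetical helper: read the OpenAI key from the environment or fail loudly.
// `env` defaults to process.env but can be swapped out for testing.
function getOpenAIKey(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (typeof key !== "string" || key.trim() === "") {
    throw new Error("OPENAI_API_KEY is not set. Add it to the .env file in your project root.");
  }
  // Trim stray whitespace that sometimes sneaks in when pasting the key
  return key.trim();
}
```

In an API route, you would call getOpenAIKey() wherever the tutorial reads process.env.OPENAI_API_KEY directly.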

Using the REST API

You can now proceed to write the logic for using the ChatGPT API in your app. To learn how to integrate the Chat endpoint, you'd typically have to consult the API reference for the ChatGPT API. However, to avoid the manual labor of researching the method, you're going to put Pieces Copilot to work.

If you aren't already aware, Pieces is a developer productivity tool that helps you organize and enrich code snippets so you can refer back to them and share them easily. To take your developer productivity up a notch, Pieces recently introduced its copilot, a chatbot that combines AI with a contextual understanding of your workflow (through retrieval-augmented generation) to help you find solutions and implementations quickly as you develop your app.

To try it out, you first need to set up Pieces. Once you have the Pieces desktop app installed, you can navigate to the Pieces Copilot section by clicking Go To... > Copilot Chats:

You'll be presented with a chat screen. You can now type the following message to ask Pieces Copilot how to integrate the ChatGPT API into your Next.js app:

```text
How to integrate the ChatGPT REST API in a Next.js app through server functions? Make use of inbuilt packages only and do not install any external dependencies
```

You'll notice that Pieces Copilot explains the complete process in detail, with instructions to set up both the client and server logic:

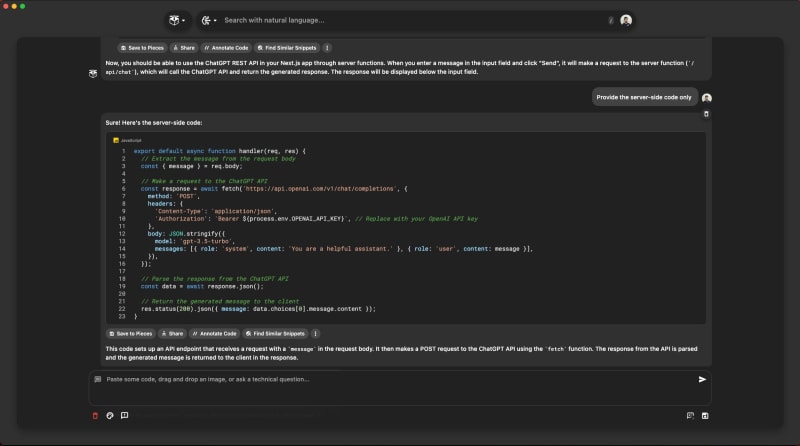

You can further shorten this output by sending the following text as the next message:

```text
Provide the server-side code only
```

You'll notice now that the copilot provides you with just the right server-side code to help set up the integration in your demo app:

With some modifications, this is how the final code snippet should look:

```js
export default function handler(req, res) {
  const { title, purpose, length } = JSON.parse(req.body)

  const baseURL = "https://api.openai.com/v1"
  const endpoint = "/chat/completions"
  const apiKey = process.env.OPENAI_API_KEY

  const requestBody = {
    "model": "gpt-3.5-turbo",
    "messages": [
      {
        "role": "system",
        "content": "You are a content generation tool. After the user provides you with the topic name, article length, and pitch of the article, you will generate the article and respond with ONLY the article in Markdown format and no other extra text."
      },
      {
        "role": "user",
        "content": "Write an article on the topic \"" + title + "\". The article should be close to " + length + " words in length. Here is a pitch describing the contents of the article: " + purpose
      }
    ],
    // max_tokens must be an integer; allow roughly 1.5 tokens per requested word
    max_tokens: Math.ceil(Number(length) * 1.5)
  }

  // Return the promise so Next.js can tell when the request has been handled
  return fetch(baseURL + endpoint, {
    method: "POST",
    body: JSON.stringify(requestBody),
    headers: {
      "Authorization": "Bearer " + apiKey,
      "Content-Type": "application/json"
    }
  })
    .then(r => r.json())
    .then(r => {
      console.log(r)
      res.status(200).json({ content: r.choices[0].message.content })
    })
    .catch(e => {
      console.log(e)
      // JSON.stringify(e) yields "{}" for Error objects, so send the message instead
      res.status(500).json({ content: "The article could not be generated. Error: " + e.message })
    })
}
```

Save this snippet in the pages/api/generate.js file in your Next.js project. You can now run the app using the yarn dev command, fill in the form, and generate your first article.
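The REST handler reads r.choices[0] directly. When OpenAI rejects a request (for example, because the key is invalid), the JSON body contains an error object instead of a choices array. That line then throws a TypeError, and the handler falls through to its catch branch with a less helpful message. The sketch below uses a hypothetical extractArticle helper that checks for the error object first. It also reports whether the article was cut off, because finish_reason is "length" when generation stops at max_tokens.

```javascript
// Hypothetical helper: pull the article out of a Chat Completions response body.
// Success bodies carry choices[0].message.content; failures carry { error: { message } }.
function extractArticle(body) {
  if (body && body.error) {
    throw new Error(`OpenAI API error: ${body.error.message}`);
  }

  const choice = body && Array.isArray(body.choices) ? body.choices[0] : undefined;
  if (!choice || !choice.message || typeof choice.message.content !== "string") {
    throw new Error("Response did not contain a message");
  }

  // "length" means generation hit max_tokens, so the article is likely incomplete
  const truncated = choice.finish_reason === "length";
  return { content: choice.message.content, truncated };
}
```

Inside the handler's second .then, you could call extractArticle(r) and send its content to the client, optionally warning the user when truncated is true.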

Pieces Copilot can do much more than code generation. Use it in your browser to explain code snippets you find online, or in your IDE to help you understand entire repositories. Plus, it leverages both cloud and local LLMs, so you can choose which LLM to use to answer questions and generate code, depending on your security constraints. For enterprise customers, we offer the option of inputting your custom API key into Pieces as well.

Using the Node.js Platform Library

As you saw in the code snippet above, the REST API can be quite cumbersome to manage, and in real-world projects you will most likely need to write a wrapper over the API so you can use it conveniently. Alternatively, OpenAI offers a range of platform-specific libraries that you can use to ease your development process.
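If you prefer to stay on the REST API, a thin wrapper might look like the sketch below. createChatClient and generate are hypothetical names, not part of any OpenAI library, and fetchImpl is injectable only so the wrapper can be tested offline. The wrapper also handles the token budget. OpenAI's rule of thumb is that one token is about three-quarters of an English word, so a 1,000-word article needs roughly 1,333 tokens, and the tutorial's 1.5 multiplier leaves some headroom. Because max_tokens must be an integer, the sketch rounds the budget up.

```javascript
// Hypothetical minimal wrapper over the Chat Completions REST endpoint.
function createChatClient({ apiKey, model = "gpt-3.5-turbo", fetchImpl = fetch }) {
  const url = "https://api.openai.com/v1/chat/completions";

  async function generate(messages, { maxTokens } = {}) {
    const body = { model, messages };
    // max_tokens must be an integer, so round any fractional budget up
    if (maxTokens !== undefined) body.max_tokens = Math.ceil(maxTokens);

    const response = await fetchImpl(url, {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(body),
    });
    const data = await response.json();

    // Non-2xx responses carry { error: { message } } instead of choices
    if (!response.ok) {
      const reason = data && data.error ? data.error.message : response.status;
      throw new Error(`Chat Completions request failed: ${reason}`);
    }
    return data.choices[0].message.content;
  }

  return { generate };
}
```

In pages/api/generate.js you might then create the client once with createChatClient({ apiKey: process.env.OPENAI_API_KEY }) and, inside an async handler, call await client.generate(messages, { maxTokens: length * 1.5 }).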

For this tutorial, you'll use the Node.js library offered by OpenAI. To install it, run the following command in your project's root directory:

```bash
yarn add openai
```

Next, replace the code in the pages/api/generate.js file with the following snippet:

```js
// Import the package
import OpenAI from "openai"

export default function handler(req, res) {
  const { title, purpose, length } = JSON.parse(req.body)

  // Initialize the package with the API key
  const openai = new OpenAI({
    apiKey: process.env.OPENAI_API_KEY,
  })

  // The body remains the same as with the REST API
  const requestBody = {
    "model": "gpt-3.5-turbo",
    "messages": [
      {
        "role": "system",
        "content": "You are a content generation tool. After the user provides you with the topic name, article length, and pitch of the article, you will generate the article and respond with ONLY the article in Markdown format and no other extra text."
      },
      {
        "role": "user",
        "content": "Write an article on the topic \"" + title + "\". The article should be close to " + length + " words in length. Here is a pitch describing the contents of the article: " + purpose
      }
    ],
    max_tokens: Math.ceil(Number(length) * 1.5)
  }

  // Instead of making a fetch call, you use the package's method
  return openai.chat.completions.create(requestBody)
    .then(completion => {
      console.log(completion)
      res.status(200).json({ content: completion.choices[0].message.content })
    })
    .catch(e => {
      console.log(e)
      res.status(500).json({ content: "The article could not be generated. Error: " + e.message })
    })
}
```

You can now restart your dev server and try running the app once again. It should work exactly as before: under the hood, the Node.js library sends the same request to the same Chat endpoint, so only the way your code makes the call has changed. See it in action here.
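The catch blocks in both handlers always respond with status 500, even when the API reported a more specific problem such as an invalid key (401) or rate limiting (429). A small mapping helper can forward that status while keeping the { content } shape the frontend expects. In the sketch below, toErrorResponse is a hypothetical name. It assumes that API errors expose their HTTP status on a status property, as the openai package's APIError does, and falls back to 500 for anything else. It reads error.message because JSON.stringify on an Error object produces "{}".

```javascript
// Hypothetical helper: map any thrown value to { status, body } for res.status().json().
function toErrorResponse(error) {
  // Forward a 4xx/5xx status when the error carries one; otherwise use 500
  const status =
    error && Number.isInteger(error.status) && error.status >= 400 ? error.status : 500;
  // message and stack are non-enumerable, so read the message explicitly
  const message = error instanceof Error ? error.message : String(error);
  return {
    status,
    body: { content: "The article could not be generated. Error: " + message },
  };
}
```

In either handler, the catch branch could then become const { status, body } = toErrorResponse(e); res.status(status).json(body).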

You've now successfully integrated the ChatGPT API into a content generation app. Feel free to play around with the demo app, try out different GPT models, or add more specificity to your prompts to gain better results. You can find the complete code for the application on the completed branch of this GitHub repo.

Conclusion

This article demonstrated how to use the ChatGPT API in your Next.js app using the REST API and the Node.js SDK. Along the way, you also learned how to use Pieces Copilot to enhance your productivity by quickly generating code through it instead of spending time sifting through ChatGPT API documentation.

Developer productivity is the core focus of all products and services offered by Pieces. You can also try out the Microsoft Teams integration that Pieces offers to enable you to ask code-related questions and generate code right from your Microsoft Teams workspace. Make sure you try out Pieces and save API references in Pieces to stay organized.

If you enjoyed working with the ChatGPT API, we’d love to have you join our Discord community where you can leverage our open source endpoints to build an even more advanced chatbot within your application.